Deepfakes, voice synthesis, and ChatGPT

Please note: This post was written by Highlander prior to their rebrand to FluidOne Business IT - Sheffield.

We’ve written previously about the danger posed by deepfakes and synthetic media – lifelike visuals created by generative AI platforms. These advancements have a number of exciting and legitimate uses, but their emergence has also presented new opportunities for cybercriminals.

As innovation around AI continues to accelerate, we wanted to revisit the world of synthetic media and generative AI to explore some new developments that promise significant benefits for individuals and businesses, but also create further opportunities for bad actors to exploit.

Deepfakes

Although we’ve covered them before, deepfakes continue to develop and are becoming increasingly prominent. Deepfakes use AI to manipulate a subject’s face: either masking it entirely, or making the subject appear to do and say things they never did. This has many legitimate uses – streaming software from Nvidia, for example, can now use deepfake capabilities to make it look as though a presenter is looking at the camera, so they seem more engaging to their audience.

Away from the potential benefits, there are growing avenues for the misuse of this technology. As a recent example, an unknown bad actor used deepfake technology to disguise themselves as the Mayor of Kyiv, Vitali Klitschko, in a call with the Mayor of Berlin in June 2022. If real-time deepfakes can be used to manipulate prominent politicians, they could have a devastating impact on the security of a business.

What’s becoming more worrying is the rise of deepfakes in conjunction with synthetic identities. Essentially, a hacker can generate a whole new person out of thin air, complete with counterfeit documentation to prove their identity. In our previous blog we highlighted examples of cybercriminals interviewing for jobs using false identities in an effort to gain access to internal systems. Now, with falsified identification documents at hand to back them up, the risk of compromise could be far greater.

Voice synthesis

Faces aren’t the only thing AI is learning to manipulate. Earlier this year, Microsoft published a paper describing a new AI model named VALL-E, which can reportedly take a three-second clip of a person speaking and use it to generate any number of new clips in that person’s voice.

This has the potential to revolutionise text-to-speech (TTS) as we know it. For example, if a famous actor wants to lend their voice to a series of audiobooks, they no longer need to record hours of content for each book. Instead, they could provide a small clip of their voice for the AI to use, and have the audiobooks generated automatically. As the AI also doesn’t require new voice data, one single clip could theoretically allow this to be done indefinitely, allowing a voice to be preserved even as the actor behind it ages. The results so far are impressive – take a listen to some of the clips in Microsoft’s demonstration, and try to spot the difference between the “Ground Truth” clips, where a voice actor reads through the text, and the clips generated by VALL-E.

Despite these exciting developments, this technology is also ripe for abuse. Because it needs such a small sample of voice data to replicate a voice, bad actors could take very short snippets of a conversation and use them to generate convincing voice messages. For example, a cybercriminal could take a clip of a CEO giving an interview on the news and use it to create a voicemail asking a member of their team to transfer funds to a new account.

Thankfully, Microsoft is yet to open this technology up to public use, and the researchers behind it are already proposing ways to ensure that this technology is only used legitimately.

ChatGPT

News about ChatGPT has been hard to avoid recently – this ground-breaking AI can generate answers to seemingly anything, and present them in casual, conversational English.

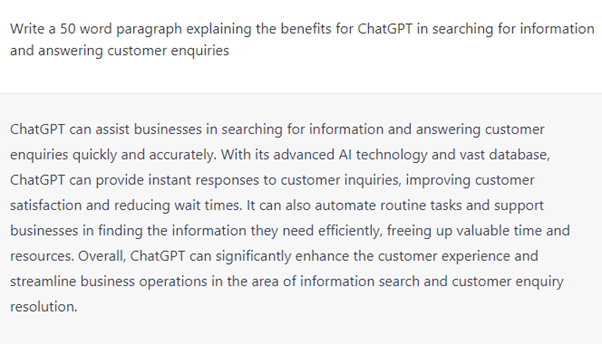

ChatGPT can assist businesses in searching for information and answering customer enquiries quickly and accurately. With its advanced AI technology and vast database, ChatGPT can provide instant responses to customer inquiries, improving customer satisfaction and reducing wait times. It can also automate routine tasks and support businesses in finding the information they need efficiently, freeing up valuable time and resources, and doing so in an understandable, conversational manner.

If you haven’t used ChatGPT yet, or come across an example of its output, here’s a small window into what it’s capable of: the entire previous paragraph was generated by the AI (albeit with a few edits of our own at the end).

It’s not perfect: it often struggles with technical details, makes up information out of thin air, and talks in circles. But there’s no doubt that the ability to quickly and easily generate responses to questions could revolutionise the way we approach things like search engines.

However, because ChatGPT is openly available to anyone, it is also prone to abuse. Experts have already raised a number of concerns about the technology, especially around how it can be used to facilitate social engineering attacks.

Social engineering relies on convincing a user to open up their systems to a bad actor. It can take a range of different forms, but one of the most common is phishing, where cybercriminals send emails attempting to convince a user to click on a certain link or provide their credentials, which the attacker can then use to compromise a system. Until now, low-level phishing attempts have often been easy to spot: grammatical mistakes and an inconsistent writing style make them look odd and unconvincing. But with ChatGPT readily available, cybercriminals can endlessly generate conversational, convincing content for phishing emails with minimal effort.

What’s next?

New technologies are always on the horizon, but there are a number of best practices you can follow to reduce the risk to your organisation. Training your people to be more aware of potential risks, and arming them with the knowledge to identify threats, is always key, as is ensuring your security estate is being put to effective use. If you’re looking to do the latter, cybersecurity vendors like Arctic Wolf can help you reinforce your defences and maximise the value of your existing security posture.

If you’d like to know more about what we can do to help improve your business’s cybersecurity, reach out to us for a chat.